How Generative AI is Rewriting Key Aspects of Production Workflows

By: Sahil Lulla and Rachel Jobin

As AI tools reshape every corner of the filmmaking process, the Entertainment Technology Center (ETC), with support from Topaz Labs, has been investigating how these technologies are redefining critical aspects of preproduction, postproduction, and cross-departmental alignment throughout the production pipeline. Some of these shifts are granular: unlike plates in traditional workflows, generative AI VFX plates must be upscaled before being integrated into the VFX pipeline. Others are broader, changing how stylistic consistency is defined and maintained throughout a production. This article explores how the ETC implemented some of these key changes on The Bends.

Upscaling Isn’t a Button—It’s a Workflow

There is a pervasive misconception that AI can instantly and cleanly upscale any footage to cinematic quality. While many AI upscaling tools exist, a studio-grade AI upscaling workflow is far more complex than a simple push of a button.

Traditional visual effects pipelines begin with high-fidelity 16- or 32-bit EXR files captured in linear color. These files are downsampled as the project progresses through post-production, resulting in a 2K–4K DCP, 8-bit SDR, or 10/12-bit HDR, depending on delivery specifications.

AI production inverts this process. Most generative models start from fragile 8-bit SDR outputs with gamma already baked in, and upscaling software alone cannot turn these files into cinema-ready plates. Upscaling amplifies any noise or artifacts baked into the source, and those artifacts are then carried through every downstream step in the VFX workflow.
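To make the "baked-in gamma" point concrete, here is a minimal sketch that decodes an 8-bit plate to linear-light float values. It assumes the plate uses the standard sRGB transfer function, which may not match the encoding a given generative model actually emits; the function name `srgb8_to_linear` is illustrative only.

```python
import numpy as np

def srgb8_to_linear(plate_u8: np.ndarray) -> np.ndarray:
    """Decode an 8-bit sRGB-encoded plate to linear-light float32 in [0, 1].

    Assumption: the source uses the sRGB transfer function.
    """
    encoded = plate_u8.astype(np.float32) / 255.0
    # Piecewise sRGB decoding: a linear toe, then a 2.4 power curve.
    low = encoded / 12.92
    high = ((encoded + 0.055) / 1.055) ** 2.4
    return np.where(encoded <= 0.04045, low, high).astype(np.float32)

# A horizontal ramp containing every possible 8-bit code value.
ramp = np.tile(np.arange(256, dtype=np.uint8), (4, 1))
linear = srgb8_to_linear(ramp)

print(linear.dtype, float(linear.min()), float(linear.max()))
print("distinct levels after decoding:", np.unique(linear).size)
```

Moving the data into a float container does not create new tonal information: the decoded plate still holds only as many distinct levels as the 8-bit source, which is why cleanup has to happen before the plate is treated like a high-bit-depth EXR.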

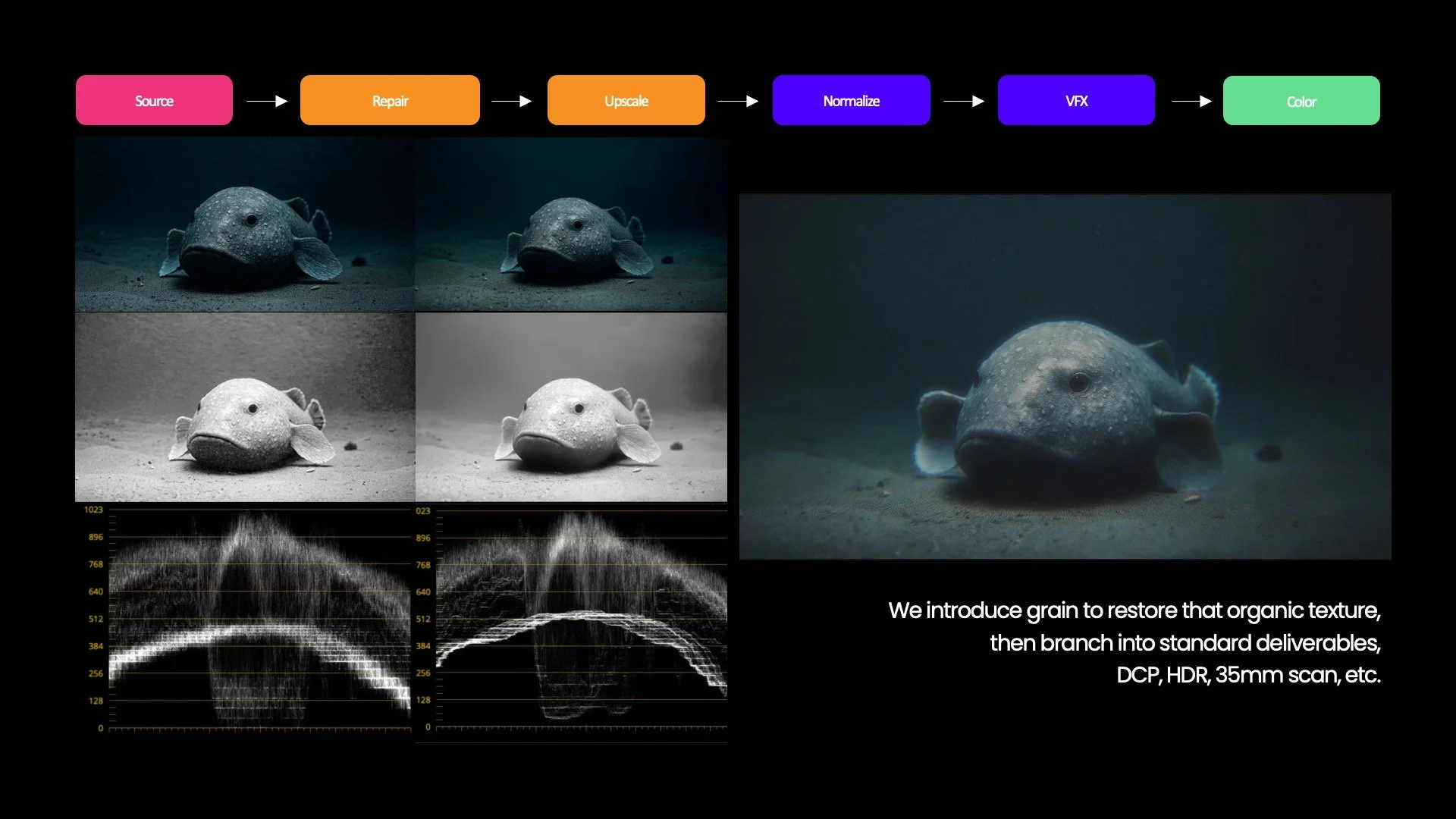

A traditional upscaling workflow compared to a gen AI workflow.

The ETC’s approach on The Bends combined traditional upscaling techniques with AI-assisted workflows in what the team called gen-AI–assisted plate restoration. The process began with Topaz Labs’ denoise model, Nyx, to remove compression artifacts from the 8-bit plates. These “restored” plates were then upscaled to 4K, 32-bit EXR using Topaz Labs’ Gaia High Quality (HQ) model and handed off to traditional VFX and DI. This hybrid plate recovery pipeline allows AI footage to behave like any other VFX plate.

Once the plate is upscaled, grain is reintroduced to restore organic texture, and additional methods are applied to mask any residual artifacts. Film emulation can assist in removing banding, but only after the AI output has been structurally cleaned. The result is then converted to standard delivery formats such as DCP or HDR.
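The grain step can be sketched in a few lines. This is a simplified stand-in, not the ETC's method: it adds zero-mean Gaussian noise to a float plate, whereas production grain tools typically model grain size, film stock response, and luminance dependence. The function name `add_grain` and the `strength` value are assumptions chosen for illustration.

```python
import numpy as np

def add_grain(plate: np.ndarray, strength: float = 0.01, seed: int = 0) -> np.ndarray:
    """Add zero-mean Gaussian grain to a float plate shaped (H, W, 3)."""
    rng = np.random.default_rng(seed)
    # One noise value per pixel, shared across channels (monochrome grain).
    noise = rng.normal(0.0, strength, size=plate.shape[:2] + (1,))
    return (plate + noise).astype(np.float32)

# A smooth gradient snapped to 8-bit levels: long runs of identical values (banding).
gradient = np.linspace(0.2, 0.3, 1920, dtype=np.float32)
banded = np.round(gradient * 255) / 255
plate = np.broadcast_to(banded[None, :, None], (4, 1920, 3))

grained = add_grain(plate)
print("levels before grain:", np.unique(plate).size)
print("levels after grain: ", np.unique(grained).size)
```

The banded gradient contains only a handful of flat steps; after grain is added, the hard step edges are broken up by per-pixel variation while the average brightness stays essentially unchanged.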

A more conventional method employs inverse tone mapping, in which 8-bit plates are mapped up to HDR using data derived from deep learning and color science. This technique excels in plate matching between AI-generated and live-action photography, supporting a more classical grading workflow.
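For intuition about what "mapping up" means, the sketch below uses a classical closed-form operator, the inverse of the Reinhard tone curve, in place of the learned mapping described above. It is an illustration of the general idea only, not the technique used on The Bends; the `max_input` clamp is an assumed parameter that keeps the expansion finite.

```python
import numpy as np

def reinhard(hdr_linear: np.ndarray) -> np.ndarray:
    """Forward Reinhard tone curve: compresses [0, inf) into [0, 1)."""
    return hdr_linear / (1.0 + hdr_linear)

def inverse_reinhard(sdr_linear: np.ndarray, max_input: float = 0.98) -> np.ndarray:
    """Expand linear SDR values in [0, 1] by inverting the Reinhard curve."""
    # Clamp below 1.0 so the division stays finite; this caps the expanded peak.
    clipped = np.clip(sdr_linear, 0.0, max_input)
    return clipped / (1.0 - clipped)

sdr = np.array([0.0, 0.18, 0.5, 0.9], dtype=np.float64)
hdr = inverse_reinhard(sdr)
print(hdr)
print(np.allclose(reinhard(hdr), sdr))  # round trip recovers the SDR values
```

Highlights expand far more than shadows and midtones under this curve, which is the basic behavior any inverse tone mapping approach has to control when matching AI-generated plates to live-action photography.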

An AI-native approach would involve training a custom video model (or fine-tuning an existing one) to map SDR inputs to HDR outputs or even generate HDR natively. Scaling that kind of setup broadly requires substantial paired data, research, and compute, similar to what Luma Labs recently demonstrated. The ETC is currently exploring the feasibility of both the inverse tone mapping and AI-native approaches.

Ultimately, upscaling AI footage is a pipeline design challenge, not a plug-in. Each decision made while upscaling or otherwise preparing footage has cascading effects on color, texture, and motion later in post. And while tools exist for individual tasks such as denoising, upscaling, and grading, the overall pipeline remains fragmented. The ongoing challenge is integrating these discrete tools into a cohesive, project-specific workflow.

Comparison of an 8-bit SDR plate before and after removal of compression artifacts. The final image has grain added back in.

Pre-Production in the Age of Generative AI

How does a generative AI production ensure that post-production and VFX remain aligned downstream? The answer lies in establishing stylistic consistency early in pre-production through the use of LoRAs (Low-Rank Adaptation).

In traditional pre-production, the creative DNA of a project begins with lookbooks, storyboards, and lens tests. These elements remain essential in AI-driven filmmaking, but their role has shifted slightly. The creative locus of AI pre-production is now LoRA creation, prompt engineering, and context engineering, each driven by the aesthetic framework that those elements establish.

LoRAs function as digital style libraries or lens kits, trained on curated imagery to reproduce specific aesthetics or lighting behaviors. Developing LoRAs is an iterative process: imperfect models are refined by combining their strongest versions or by stacking multiple LoRAs with varying weightings to achieve nuanced control.
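Stacking LoRAs with different weightings has a simple numerical form: each adapter contributes a low-rank update that is scaled and added to the base weights. The sketch below shows that arithmetic for a single weight matrix. The adapter names (`lighting`, `palette`), the matrix sizes, and the weights 0.8 and 0.4 are hypothetical, and any alpha/rank scaling a real LoRA file carries is assumed to be folded into the weight.

```python
import numpy as np

def merge_loras(base_weight, adapters):
    """Return base_weight + sum(weight * up @ down) over (down, up, weight) adapters."""
    merged = base_weight.astype(np.float64, copy=True)
    for down, up, weight in adapters:
        # up is (d_out, rank), down is (rank, d_in): their product is a low-rank update.
        merged += weight * (up @ down)
    return merged

rng = np.random.default_rng(0)
d_out, d_in, rank = 8, 6, 2
base = rng.normal(size=(d_out, d_in))

# Two hypothetical style adapters, each with its own blend weight.
lighting = (rng.normal(size=(rank, d_in)), rng.normal(size=(d_out, rank)), 0.8)
palette = (rng.normal(size=(rank, d_in)), rng.normal(size=(d_out, rank)), 0.4)

merged = merge_loras(base, [lighting, palette])
print(merged.shape)
print("rank of combined update:", np.linalg.matrix_rank(merged - base))
```

Raising or lowering an adapter's weight changes how strongly its look pulls on the base model, which is the knob artists turn when balancing stacked styles.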

This iterative process also helps develop a taxonomy of style tags, such as the lighting treatment or composition rules for a specific scene, allowing artists to recombine looks across shots. When paired with CLIP-based quality control and project-specific embedding libraries, this approach builds data consistency throughout the creative pipeline.
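An embedding-based quality check of this kind could work roughly as follows: each generated frame is compared against a library of approved reference embeddings, and frames that match nothing closely are flagged for review. This is a minimal sketch under stated assumptions. In practice the vectors would come from an image encoder such as CLIP; here they are toy 3-dimensional vectors, and the function name `flag_off_style` and the 0.8 threshold are illustrative.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of a and rows of b."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return a @ b.T

def flag_off_style(frame_embeddings, reference_embeddings, threshold=0.8):
    """Mark frames whose best match in the reference library falls below threshold."""
    sims = cosine_similarity(frame_embeddings, reference_embeddings)
    return sims.max(axis=1) < threshold

# Toy stand-ins for encoder outputs (real embeddings have hundreds of dimensions).
reference_library = np.array([[1.0, 0.0, 0.0],
                              [0.0, 1.0, 0.0]])
frames = np.array([[0.9, 0.1, 0.0],   # close to the first reference look
                   [0.0, 0.0, 1.0],   # unlike anything in the library
                   [0.5, 0.5, 0.0]])  # halfway between two looks

print(flag_off_style(frames, reference_library).tolist())  # [False, True, True]
```

The threshold is a project-specific judgment call: set too high, it flags acceptable variation; set too low, it lets drifting shots through.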

In effect, LoRAs and prompts replace lenses and LUTs as the new grammar of cinematic design. Learn more about how LoRAs were used to achieve stylistic consistency on The Bends [in this post].

AI as a Statistical Renderer—Not a Physics Engine

Once stylistic parameters are defined, understanding how AI interprets motion and realism becomes essential. Another key shift introduced by generative AI workflows lies in how filmmakers conceptualize simulation. AI video models often appear to mimic physical realism. Visual elements like a character’s hair blowing in the wind or water splashing onto a beach would normally be simulated with physics-based tools like Houdini, but generative AI isn’t a physics engine.

Instead, generative AI systems perform probabilistic rendering, predicting pixels based on learned statistical patterns rather than simulating physical forces. Recognizing this distinction helps artists anticipate how AI models behave and determine where to apply traditional simulation tools for greater control. As powerful as generative AI is, a physics engine is sometimes necessary to make certain movements look realistic.

Understanding generative AI as a statistical renderer also points toward the next frontier: world models. Emerging systems such as Google’s Genie 3 promise to integrate tone, physics rules, and visual DNA into unified environments that maintain internal consistency across shots. Whereas today’s workflows resemble a patchwork of nodes, world models could consolidate these functions into a single coherent ecosystem, streamlining the entire AI pipeline.

A Collaborative Future for AI-Driven Filmmaking

Generative AI workflows blur traditional boundaries between departments. What begins in pre-production can directly influence upscaling, color, and compositing decisions downstream.

Success depends on early collaboration across technical and creative domains, and on understanding AI models not as “magic boxes,” but as probabilistic systems that expand creative potential through data-driven design.

AI isn’t replacing artists or engineers; it’s refactoring skill sets and breaking down silos. When creatives and technologists collaborate from the earliest stages, AI becomes not a shortcut, but a catalyst for new forms of artistic expression.